Picture This: Developing Storm Surge Visualization

When a tropical storm or hurricane develops in the open ocean, the National Hurricane Center (NHC) issues advisories that forecast the storm's track and the intensity of its wind field. These advisories predict when and where the hurricane is expected to make landfall, even while the storm is still far from the coast.

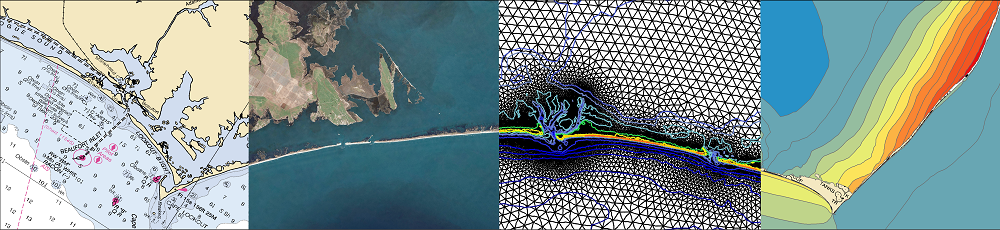

This information serves as input to an ocean model, which then predicts the water levels (storm surge) and wave heights that these winds will produce at various locations along our coastline over the coming days. The results mean more to end users when they are visualized well. The chief objective of our project is to improve how these model outputs are communicated to end users by producing them in popular file formats, such as GIS-based shapefiles and the KMZ files used in Google Earth.
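To make the KMZ target concrete, here is a minimal sketch, not the project's actual pipeline, of how point predictions could be packaged for Google Earth. A KMZ file is a ZIP archive holding a KML document, conventionally named `doc.kml`. The function names `build_kml` and `write_kmz`, and the `(name, lon, lat, surge_m)` tuple layout, are assumptions made for illustration; real model output would also need contours or gridded surfaces rather than isolated points.

```python
import zipfile
import xml.etree.ElementTree as ET

KML_NS = "http://www.opengis.net/kml/2.2"


def build_kml(stations):
    """Return KML bytes with one Placemark per (name, lon, lat, surge_m) tuple.

    The tuple layout is a hypothetical stand-in for real model output.
    """
    ET.register_namespace("", KML_NS)
    kml = ET.Element(f"{{{KML_NS}}}kml")
    doc = ET.SubElement(kml, f"{{{KML_NS}}}Document")
    for name, lon, lat, surge_m in stations:
        pm = ET.SubElement(doc, f"{{{KML_NS}}}Placemark")
        ET.SubElement(pm, f"{{{KML_NS}}}name").text = name
        ET.SubElement(pm, f"{{{KML_NS}}}description").text = (
            f"Predicted surge: {surge_m:.2f} m"
        )
        point = ET.SubElement(pm, f"{{{KML_NS}}}Point")
        # KML stores coordinates as longitude,latitude,altitude.
        ET.SubElement(point, f"{{{KML_NS}}}coordinates").text = f"{lon},{lat},0"
    body = ET.tostring(kml, encoding="utf-8")
    return b'<?xml version="1.0" encoding="UTF-8"?>\n' + body


def write_kmz(stations, target):
    """Write a KMZ (zipped KML) to a file path or a binary file-like object."""
    with zipfile.ZipFile(target, "w", zipfile.ZIP_DEFLATED) as zf:
        zf.writestr("doc.kml", build_kml(stations))
```

Calling `write_kmz(stations, "surge.kmz")` with a list of such tuples would produce a file that Google Earth can open. Shapefile output is left out of this sketch because it needs a third-party GIS library rather than the standard library.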